Imagine you have a super-smart robot friend who helps answer all sorts of questions you have. You can ask your robot friend something, and it will give you an answer based on what you asked. This is kind of like what a Large Language Model (LLM) does.

So, when you ask your robot friend different things, it makes sense that you’ll get different answers, right? But did you know that if you ask the same question in different ways, you might get different answers too? It’s like baking: the ingredients (your questions) and how you mix them (how you ask) can make a big difference in the cake (the answer) you get.

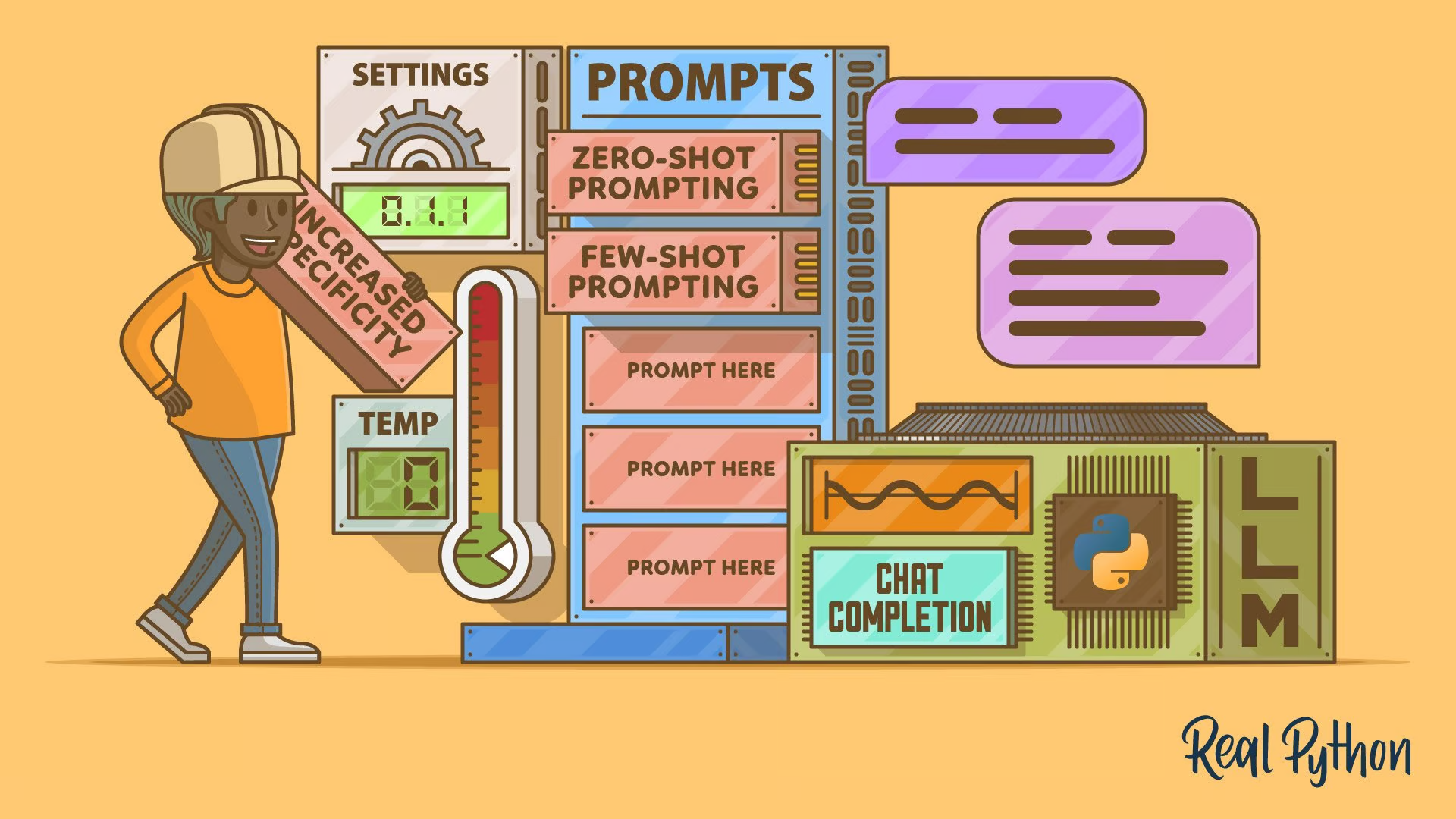

So, when you’re talking to your robot friend (using an LLM), thinking about how you ask something is super important. It’s like giving your friend hints so it can better understand what you are looking for. This is called “prompt engineering”.

Knowing how to ask things well can help you get clearer and more helpful answers. For example, one way to ask is by showing a few examples of the kind of answer you want before asking your real question, which is like saying, “Here are a couple of questions and how I’d like them answered. Now answer this one the same way.” This is called “few-shot prompting”.
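To make few-shot prompting a bit more concrete, here is a minimal sketch in Python. It only builds the text you would send to an LLM; it does not call any real model. The helper name `build_few_shot_prompt`, the `Q:`/`A:` layout, and the example questions are all made up for illustration, not part of any particular library.

```python
def build_few_shot_prompt(examples, question):
    """Put a few worked examples in front of the real question.

    `examples` is a list of (question, answer) pairs. The "Q:" / "A:"
    layout is just one common convention, assumed here for illustration.
    """
    lines = []
    for example_question, example_answer in examples:
        lines.append(f"Q: {example_question}")
        lines.append(f"A: {example_answer}")
        lines.append("")  # blank line between examples
    # The real question goes last, with the answer left open for the model.
    lines.append(f"Q: {question}")
    lines.append("A:")
    return "\n".join(lines)


# Two made-up examples that show the robot friend the pattern we want.
examples = [
    ("What is the opposite of hot?", "cold"),
    ("What is the opposite of up?", "down"),
]
prompt = build_few_shot_prompt(examples, "What is the opposite of fast?")
print(prompt)
```

Because the examples all answer with a single word, the robot friend gets a strong hint that you want a one-word answer for the last question too.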

Another cool way is “chain-of-thought prompting”. This is when you ask your robot friend to explain its thinking step by step. It’s like when you ask someone how they solved a math problem, and they explain each step. This can help you get better answers, especially for questions that take several steps to work out!
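Here is a small sketch of what a chain-of-thought prompt could look like, again in Python and again only building the text rather than calling a real model. The helper name `build_chain_of_thought_prompt`, the exact wording of the instruction, and the apple question are assumptions chosen for illustration; other phrasings may work just as well.

```python
def build_chain_of_thought_prompt(question):
    """Add a step-by-step instruction after the question.

    The wording of the instruction is an illustrative assumption,
    not a fixed rule.
    """
    return (
        f"{question}\n"
        "Let's think step by step, and then give the final answer "
        "on its own line starting with 'Answer:'."
    )


# A made-up question that needs more than one step to solve.
question = (
    "I have 3 boxes with 4 apples in each box. "
    "I eat 2 apples. How many apples are left?"
)
cot_prompt = build_chain_of_thought_prompt(question)
print(cot_prompt)
```

Asking for the final answer on its own line is just a convenience: it makes the answer easy to spot after the robot friend has written out all its steps.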

I hope this helps with understanding how asking questions in different ways can get all kinds of different answers from our smart robot friend (aka LLM)!

About the Author

- Amir Aryani, https://aigraph.researchgraph.org/blogs/author/amir/ (13 November 2023)
